Apache Spark is a Big Data cluster computing framework that can run on Standalone, Hadoop, Kubernetes, Mesos clusters, or in the cloud. We can read and write data from various data sources using Spark. For example, we can use CSV (comma-separated values), and TSV (tab-separated values) files as an input source to a Spark application. So, here we will discuss how we can read a CSV file with a newline character in a data frame using PySpark. We will be using the default options available in Spark to read a CSV file containing newline characters as values. It can help us to avoid any additional processing needed to remove these newline characters from the file. That is to say, it helps us to read the original column values as we don’t need to replace the newline characters with any other character.

Suppose, we have a CSV file and that file contains some newline characters in the column values. Also, we are using the \n (default row separator) as the row separator. Now, if we will read this file in the Spark, these newline characters will be parsed as a row separator and will start breaking a single row into multiple rows. Therefore, we will start getting the incorrect column values.

Read CSV file with Newline character in PySpark without “multiline = true” option

Below is the sample CSV file with 5 columns and 5 rows. However, the address column contains newline characters in it. Therefore, it will break the rows in between. Also, please notice the double-quote symbols used as a text qualifier in this file.

empid,empname,dob,adddress,contact

1,"Adam",19800101,"New Delhi,

India",1234567890

2,"David",19810109,"Kolkata India",9876543210

3,"Smith",19751210,"Mumbai India",1234567809

4,"Kevin",19810109,"Chennai,

India",1234567009

5,"Andrew",19810109,"New Delhi, India",1234567090

If we will open this file in excel or any CSV editor, we will get the below output.

Let’s try to read the above file without using the “multiline = true” property in PySpark. We can use this code block to read the above file in PySpark.

df = spark.read.option("delimiter",",").option("header","true").csv("hdfs:///user/myuser/Sample_CSV_File_Multiline_Spark.csv")

#Show top 10 data frame rows

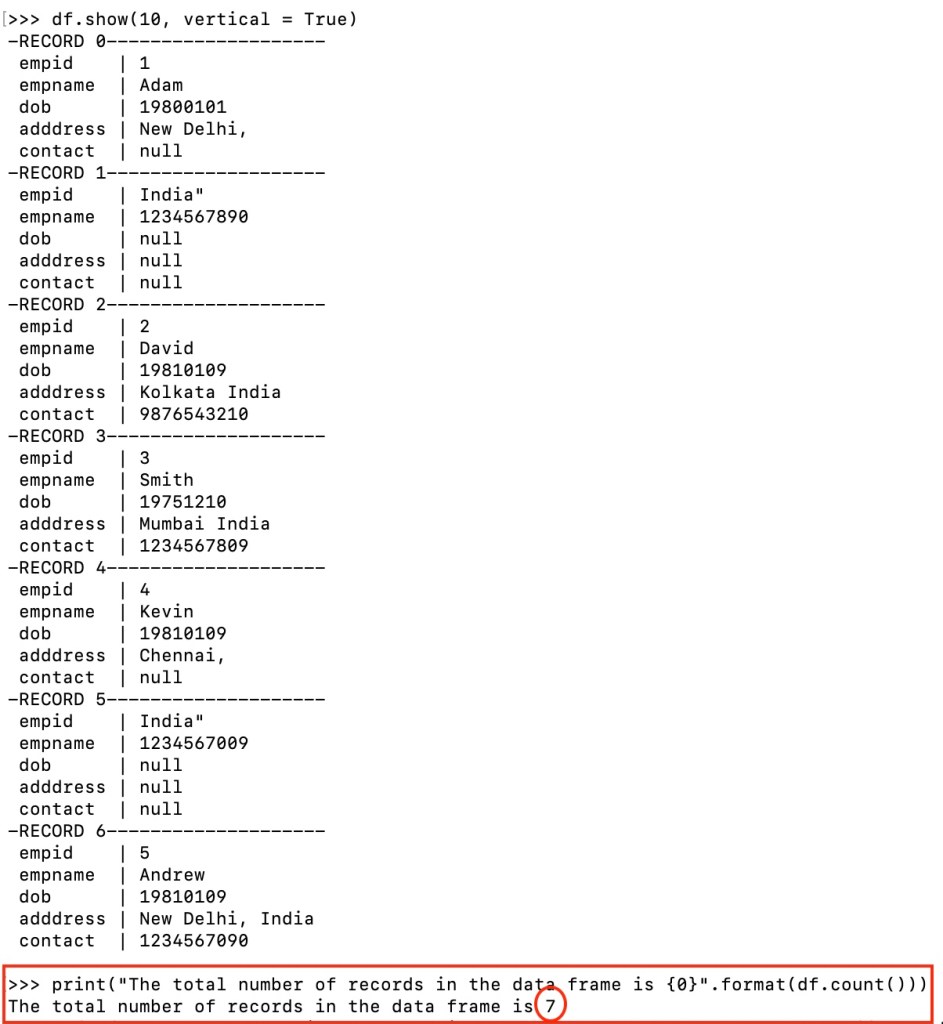

df.show(10, vertical = True)

#Get total row count in the data frame

print("The total number of records in the data frame is {0}".format(df.count()))

Output

We can see that we are getting incorrect column values because of these newline characters. The above PySpark code is not able to parse these newline characters effectively. Therefore, we are getting 7 rows instead of 5 rows. The above code handles these newline characters as row splitters rather than simple column values. As a result, a single row gets split into multiple rows. However, Spark provides an option to parse these newline characters efficiently.

Read CSV file with Newline character in PySpark with “multiline = true” option

Now, let’s try reading the same file with “multiline = true” property available in Spark. This is the code to read this file correctly in PySpark.

df_multiline = spark.read.option("delimiter",",").option("header","true").option("multiline", "true").csv("hdfs:///user/myuser/Sample_CSV_File_Multiline_Spark.csv")

#Show top 10 data frame rows

df_multiline.show(10, vertical = True)

#Get total row count in the data frame

print("The total number of records in the data frame is {0}".format(df_multiline.count()))

Output

In the above image, we can see that the “multiline = true” property helped us to read this file correctly. This feature of Spark is available out of the box and can be used without adding any additional jar files. Also, this helps to avoid modifying the original values of the columns. That way, we can read the newline characters available inside the column values correctly.

Thanks for the reading. Please share your thoughts in the comment section.