In this post, we discussed how to fill a null value with the previous not-null value in a Spark Dataframe. We have also discussed how to extract the non-null values per group from a spark dataframe. Now, in this post, we will learn how to fill a null value with the next available not-null value in a spark dataframe using the forward-fill method. To demonstrate this with the help of an example, we will create a sample table with some dummy data using the Scala programming language. So, let’s create the sample data and table now.

//Let's create sample table tbl_empCTCDetail

spark.sql("""CREATE TABLE testdb.tbl_empCTCDetail

(

empName STRING,

dtYear INTEGER,

CTC_USD DECIMAL(18,2)

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ','

STORED AS TEXTFILE

LOCATION 'tbl_empCTCDetail/' """)

//Insert some sample data into the table

spark.sql("""INSERT INTO TABLE testdb.tbl_empCTCDetail

(empName, dtYear, CTC_USD)

SELECT 'Alex' AS empName, 2015 AS dtYear, 50000 AS CTC_USD

UNION ALL SELECT 'Alex' AS empName, 2016 AS dtYear, NULL AS CTC_USD

UNION ALL SELECT 'Alex' AS empName, 2017 AS dtYear, NULL AS CTC_USD

UNION ALL SELECT 'Alex' AS empName, 2018 AS dtYear, 60000 AS CTC_USD

UNION ALL SELECT 'Ken' AS empName, 2015 AS dtYear, 80000 AS CTC_USD

UNION ALL SELECT 'Ken' AS empName, 2016 AS dtYear, NULL AS CTC_USD

UNION ALL SELECT 'Ken' AS empName, 2017 AS dtYear, NULL AS CTC_USD

UNION ALL SELECT 'Ken' AS empName, 2018 AS dtYear, 90000 AS CTC_USD""")

//Now, verify the sample table and its data

spark.sql("SELECT * FROM testdb.tbl_empCTCDetail order by empName, dtYear").show(false)

Sample table and data

Problem statement

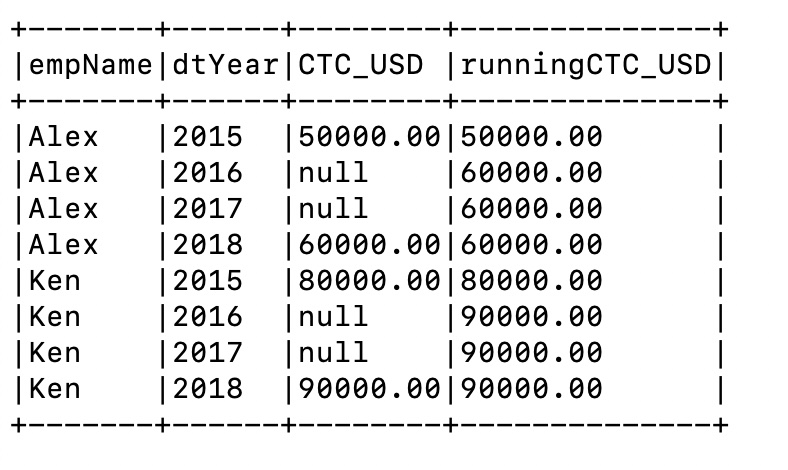

Now, suppose we want to replace the null values with the next available not-null value in the above dataframe. The sample data contains the employee name, year, and CTC value in USD. Now, we need to write a query to substitute the null values with the next year’s not-null CTC value. That means, in case the CTC value is NULL for any given year, we need to consider the next year’s CTC value instead of the NULL value to populate the resultset.

Query to fill null with the next not null value in a Spark dataframe

Below is the query that we can be used to replace a null value with the next available not null value for an employee.

import org.apache.spark.sql.functions.{last, col, when, expr}

import org.apache.spark.sql.expressions.Window

val df = spark.sql("SELECT * FROM testdb.tbl_empCTCDetail")

val window = Window.partitionBy("empName").orderBy(("dtYear")).rowsBetween(Window.currentRow, Window.unboundedFollowing)

df.withColumn("runningCTC_USD", when(col("CTC_USD").isNull, first("CTC_USD", ignoreNulls = true).over(window)).otherwise(col("CTC_USD"))).orderBy("empName", "dtYear").show(false)

Output

In the above query, firstly, we partitioned the data using the employee name column. As we need to pick the next available value for each employee, we have partitioned the data based on the employee column. Secondly, we have ordered the rows based on the dtYear column in asccending order. Finally, we have used “Window.currentRow, Window.unboundedFolowing” to extract the next year CTC. However, we have used “first(“CTC_USD”, ignoreNulls = true)” to consider only one value that is not a null value.

Thanks for the reading. Please share your inputs in the comment section.